Improve robot grasping ability under uncertainties with deep reinforcement learning

Master Thesis

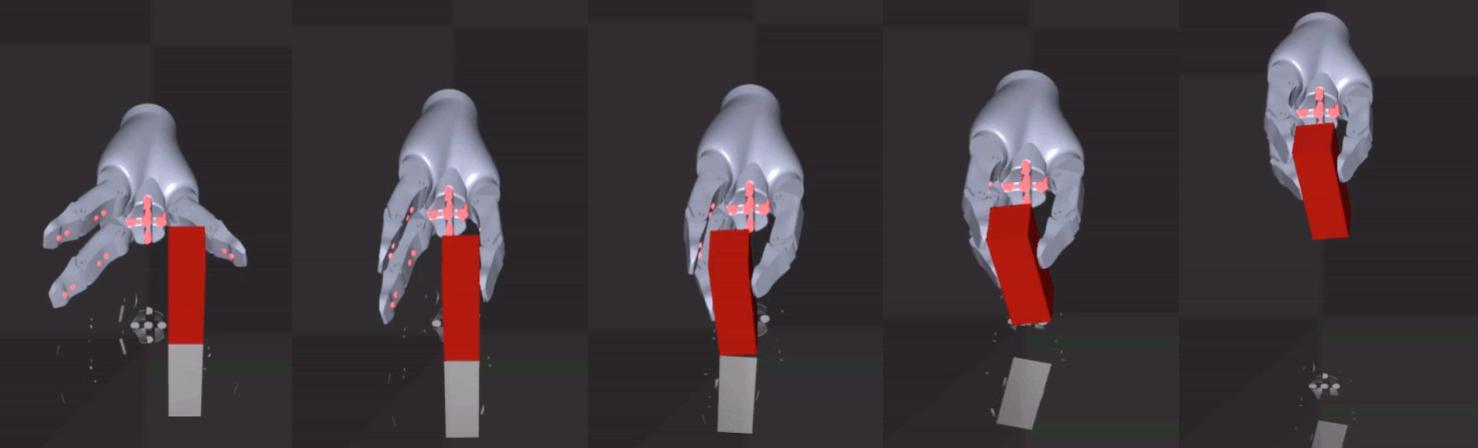

My previous human studies showed that humans perform much better than robots at moving robot fingers to grasp objects under uncertainty. I took one of the human-derived grasping strategies as an expert demonstration and applied deep reinforcement learning to teach a robot to grasp a wide variety of basic objects at random in-hand positions. The learning algorithm used for this problem is Deep Deterministic Policy Gradient (DDPG), an off-policy actor-critic algorithm for continuous control. The agent is exposed to the expert demonstration of one object prior to learning, followed by pretraining to improve the learning process.

This work has been submitted to the Robotics: Science and Systems (RSS) conference, 2020.

Introduce a new robotic manipulation benchmark

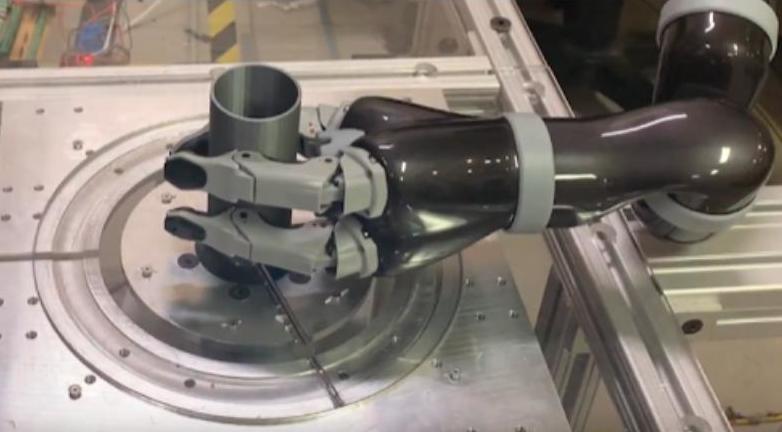

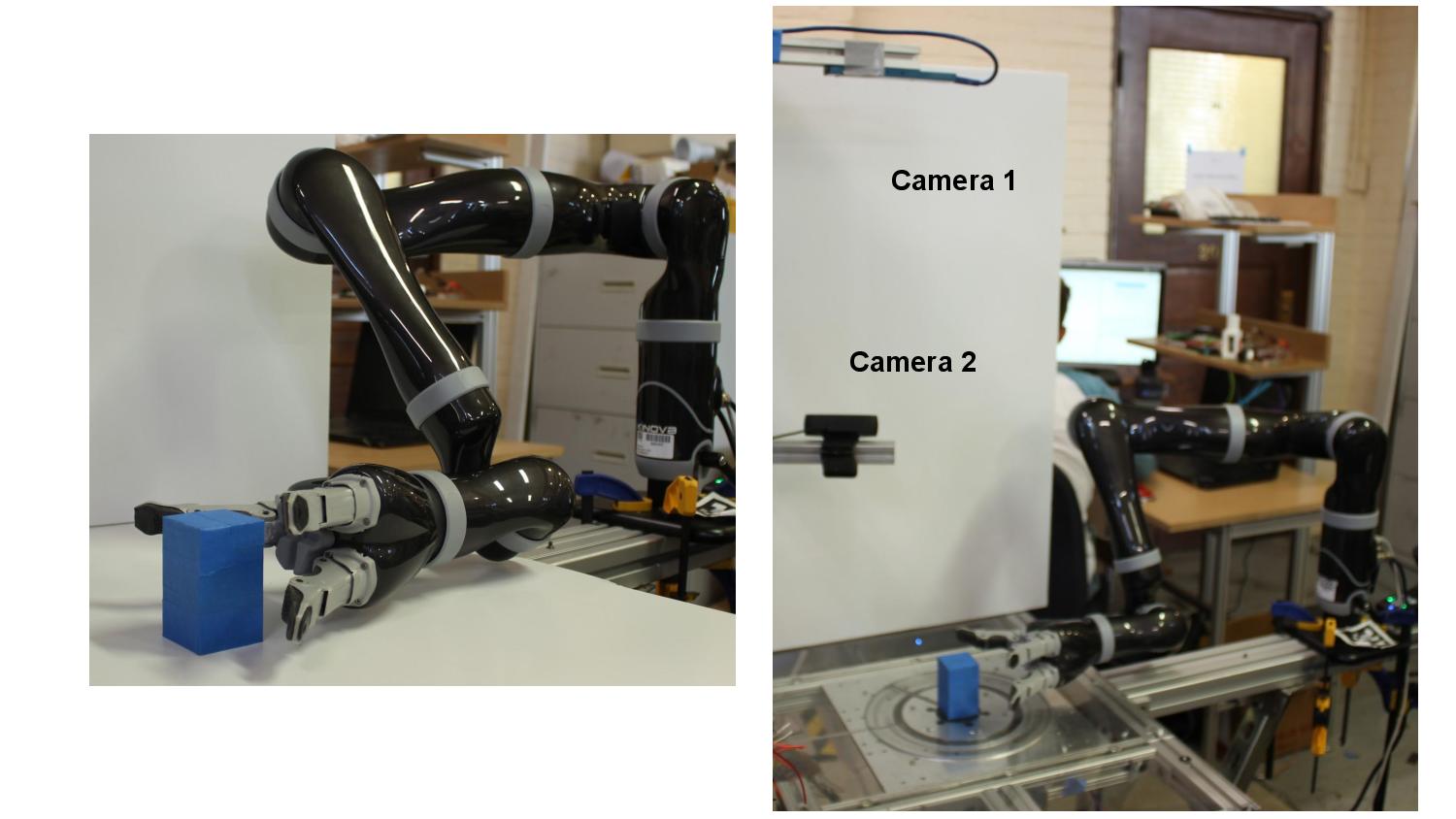

Currently there are only a few robotic manipulation benchmarks that evaluate both the hardware design and the control aspects of a robot gripper. This motivated me to design a new benchmark that evaluates both the mechanical design of the gripper and the control strategy used for grasp planning. The overall framework of the benchmark is to place the robot hand at a pose where the object is centered in the hand, then gradually move the hand away from center to simulated "noisy" poses that become increasingly challenging for picking up the object. The goal is to determine at how many poses the robot can successfully pick up the target object. One highlight is that binary search is used in the benchmark to make the grasp trials more efficient. In addition, I developed software to run the benchmark autonomously on the physical testbed we built, with no human intervention needed to reset the scene between grasp trials.
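To illustrate how binary search can cut down the number of grasp trials, here is a minimal sketch. It assumes that grasp success is monotonic in the offset from center (the grasp succeeds up to some offset and fails beyond it), which is a simplification. The names `last_successful_index`, `try_grasp`, and `simulated_grasp`, as well as the offset values, are hypothetical and not taken from the benchmark software.

```python
from typing import Callable, Sequence


def last_successful_index(
    offsets: Sequence[float],
    try_grasp: Callable[[float], bool],
) -> int:
    """Return the index of the largest offset at which the grasp succeeds.

    Assumes `offsets` is sorted in increasing order and that success is
    monotonic: once a grasp fails at some offset, it fails at all larger
    ones. Returns -1 if the grasp fails even at the smallest offset.
    """
    lo, hi = 0, len(offsets) - 1
    best = -1
    while lo <= hi:
        mid = (lo + hi) // 2
        if try_grasp(offsets[mid]):
            best = mid      # success: try poses farther from center
            lo = mid + 1
        else:
            hi = mid - 1    # failure: try poses closer to center
    return best


# Simulated stand-in for a physical grasp trial (hypothetical threshold).
offsets_cm = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
trials = []


def simulated_grasp(offset: float) -> bool:
    trials.append(offset)
    return offset <= 2.0


idx = last_successful_index(offsets_cm, simulated_grasp)
print(f"largest successful offset: {offsets_cm[idx]} cm after {len(trials)} trials")
```

In this simulated run, the search needs 3 trials instead of testing all 8 poses one by one. On real hardware, grasp outcomes are noisy, so the monotonicity assumption may only hold approximately.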

Physical Grasp Testing Infrastructure

Robotic manipulation research usually requires human intervention to reset the test scene, for example, placing the test object back in its original position after every grasp trial. We are interested in building a physical testbed that consists of a robust reset mechanism with multiple embedded sensors, including RGB sensors. The main purpose is to automate robot grasp trials and to collect large amounts of real-world data, further reducing the challenges of sim-to-real transfer in robotic manipulation.

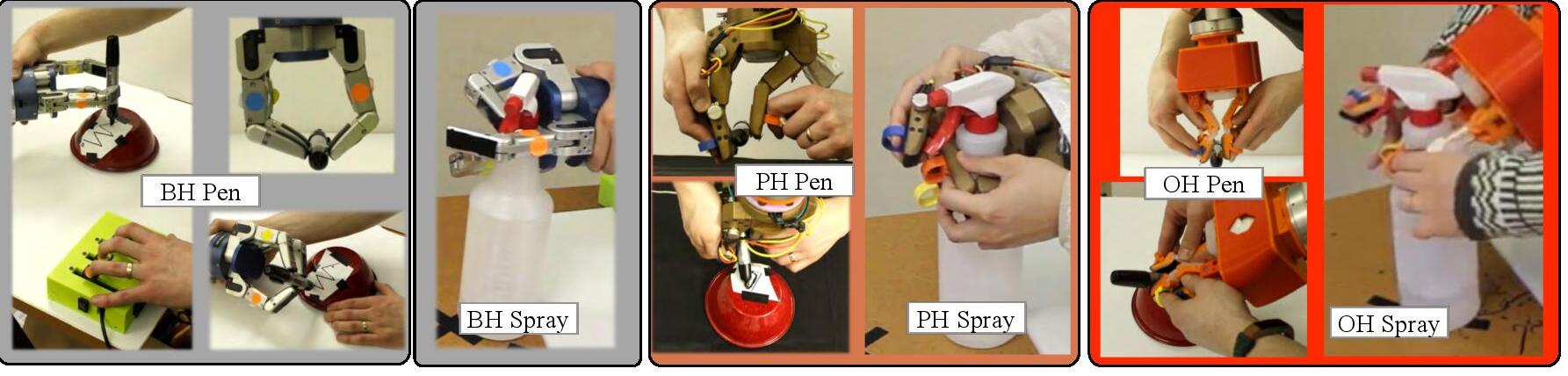

Human and robot studies on near-contact grasping

Humans are good at grasping everyday objects, mainly because most objects are designed in a human-centric way. Most grasp planners, in turn, focus on finding good candidate hand placements around an object, without considering finger movement during the grasp. Finger closure is critical because the moment at which the fingers make contact with the object determines grasp success most of the time. We therefore introduced "near-contact" grasping, a near-grasp condition in which the robot hand and fingers are very close to the object. I designed the experiment and conducted human studies on multiple robot hands to observe the grasping strategies that humans demonstrated under environmental uncertainty (where the object is not at the center of the hand). From the study I identified three primitive control strategies and turned them into fine-tuned PID grasping controllers. I published the work at the International Conference on Intelligent Robots and Systems (IROS), and I represented our lab in presenting it at the conference.

Paper link || Video || Github code
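As a rough illustration of what a PID grasping controller looks like, the sketch below implements a generic discrete PID loop that drives a finger-to-object gap toward zero. This is not the controller from the paper: the controlled quantity, the gains, the time step, and the toy finger model are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Generic discrete PID controller (illustrative, not the paper's)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, error: float, dt: float) -> float:
        """Return the control output for the current error."""
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Toy simulation: a finger closes a 2 cm gap to the object surface.
# The finger is modeled as a pure velocity-controlled joint (assumption).
pid = PID(kp=2.0, ki=0.1, kd=0.0)   # hypothetical gains
dt = 0.01                           # control period in seconds
initial_gap = 0.02                  # metres
gap = initial_gap

for _ in range(50):
    closing_velocity = pid.step(error=gap, dt=dt)
    gap -= closing_velocity * dt

print(f"gap after 0.5 s: {gap * 1000:.2f} mm")
```

Each of the three primitive strategies could, in principle, use a loop of this shape with its own error signal and gains; the actual signals and tuning are described in the paper.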

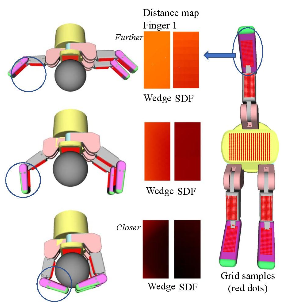

Evaluate grasps by exploiting geometric information

To evaluate how good a robot grasp is, we first need metrics for measurement and evaluation. This is where grasp metrics come in. However, grasp metrics normally depend on the physical contacts between the robot hand and the object, which can be very hard to obtain due to factors such as noise and the limited resolution of the data collected from haptic sensors. We were interested in using other representations to measure the quality of a grasp. In summer 2018, I collaborated with a student on creating a novel grasp metric that exploits geometric relationships between the robot hand and object surfaces. In summary, we collected hundreds of robot grasp samples on multiple objects and measured the change in distance between the robot hand and the object throughout the entire grasp. We then applied machine learning to train a grasp quality classifier that predicts how good a grasp is, given those distances. This work was published at the International Conference on Robotics and Automation (ICRA), 2019.

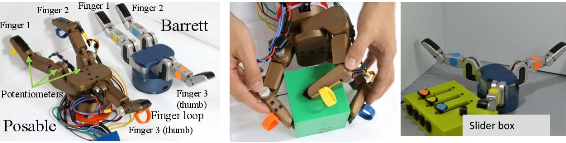

Analyze the capabilities of robot hands from human studies

Robot hand design is often ad hoc. Many robot hands are designed so that they can only pick up specific objects or perform particular tasks. Hand designs can be broken down along several dimensions: number of fingers, number of finger joints, actuation scheme of the fingers, stiffness of the finger joints, and so on. We designed human studies featuring some difficult manipulation tasks to investigate the roles that different hand features play in these tasks. My main responsibilities were to assist the paper lead (a PhD student) in running experiments and analyzing data. This work was published at the International Conference on Intelligent Robots and Systems (IROS), 2018.

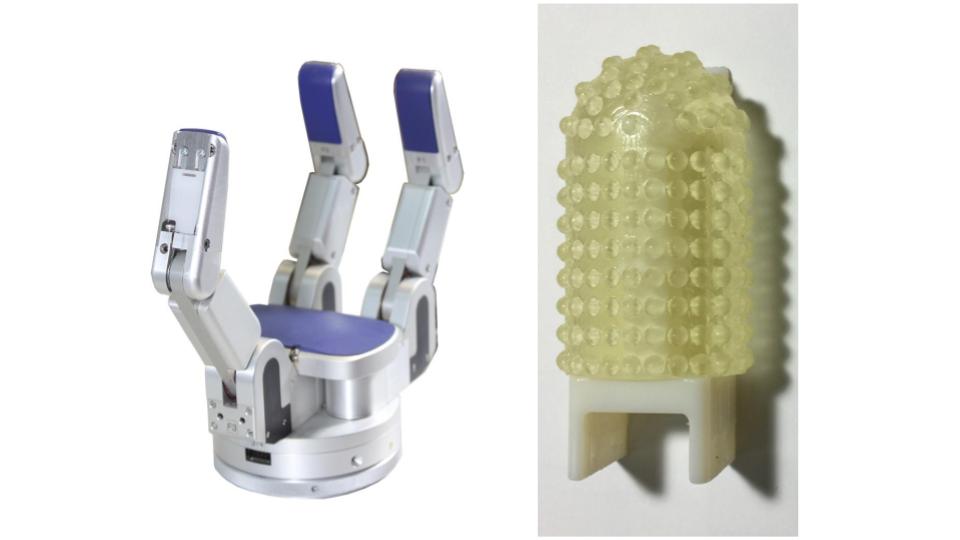

4-DOF Yale OpenHand Model O

When I first joined the research group as an undergraduate research assistant, I built an open-source, 4-DOF robot gripper that was originally designed and built by the Yale University manipulation group. This hand, the Yale OpenHand Model O, is underactuated and controlled with one tendon (string) per finger. I also developed a control interface to actuate the robot hand.

Robot Finger Pad Design

This was my senior capstone design project. The existing 4-DOF Barrett Hand could hardly pick up small, thin objects such as a pen or a plate. We designed soft finger pads that can be easily attached to the robot fingers. Each pad features bumps near the fingertip and a stiff plate that acts as a finger "nail" to increase the contact surface area with small objects. Throughout the prototyping process, we used the CAD software SolidWorks, analysis tools such as finite element analysis, and a state-of-the-art 3D printer. We presented our work at Oregon State University's annual Engineering Expo.